In the ever-evolving landscape of artificial intelligence (AI), ensuring the seamless integration of components is crucial to the overall functionality and dependability of AI systems. Integration testing, a crucial phase of the software development lifecycle, focuses on verifying that the various components of a system work together as expected. For AI systems, which often involve complex interactions among multiple modules, data pipelines, and algorithms, this becomes even more challenging. This article explores best practices for component integration testing in AI systems to ensure robustness, accuracy, and efficiency.

Understanding Component Integration Testing

Component integration testing is the process of evaluating how individual software modules or components work together as a cohesive unit. In AI systems, these components may include:

Data Processing Modules: Handle the acquisition, cleaning, and transformation of data.

Machine Learning Models: Perform tasks such as classification, regression, and clustering.

Feature Engineering Components: Extract and select features relevant to the models.

APIs and Interfaces: Facilitate communication between different components.

User Interfaces: Interact with end users and present results.

The goal of integration testing is to identify and resolve issues that may not surface during unit testing of individual components. For AI systems, this means verifying the end-to-end workflow, data integrity, and the interactions between components.

Best Practices for Component Integration Testing in AI Systems

1. Define Clear Integration Points

Before diving into testing, clearly define the integration points between components. Document the expected interactions, data flows, and dependencies. This clarity helps in designing precise test cases and scenarios and minimizes ambiguity during the testing phase.

Example: In an AI-powered recommendation system, integration points might include data ingestion from user activity logs, feature extraction, and model inference. Documenting these points ensures that each interaction is tested thoroughly.
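Documented integration points become easier to test when they are also written down as data. The following minimal Python sketch describes each point by its producer, its consumer, and the fields that cross the boundary. It then reports any payload that breaks that contract. The `IntegrationPoint` class, the component names, and the schemas are hypothetical illustrations for the recommendation example. They are not part of any particular framework.

```python
from dataclasses import dataclass


# Hypothetical description of one integration point; names and schemas
# below are illustrative assumptions for a recommendation system.
@dataclass
class IntegrationPoint:
    producer: str
    consumer: str
    schema: dict  # field name -> expected Python type


INTEGRATION_POINTS = [
    IntegrationPoint("activity_log_ingest", "feature_extractor",
                     {"user_id": str, "item_id": str, "timestamp": float}),
    IntegrationPoint("feature_extractor", "model_inference",
                     {"user_id": str, "features": list}),
]


def contract_violations(point: IntegrationPoint, payload: dict) -> list[str]:
    """Return human-readable schema violations (an empty list means valid)."""
    problems = []
    for name, expected_type in point.schema.items():
        if name not in payload:
            problems.append(f"{point.producer}->{point.consumer}: missing '{name}'")
        elif not isinstance(payload[name], expected_type):
            problems.append(
                f"{point.producer}->{point.consumer}: '{name}' is "
                f"{type(payload[name]).__name__}, expected {expected_type.__name__}"
            )
    return problems
```

An integration test can feed real payloads captured at each boundary through `contract_violations` and fail on any non-empty result. Keeping the contracts in one list also means they can serve as the documentation itself.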

2. Develop Comprehensive Test Cases

Create test cases that cover a range of scenarios, including normal operation, edge cases, and error conditions. Ensure that the test cases reflect real-world usage patterns and the full spectrum of interactions between components.

Normal Operation: Test with expected inputs and verify that the system performs as intended.

Edge Cases: Test with unusual or extreme inputs to ensure the system handles them gracefully.

Error Conditions: Simulate failures or incorrect data to verify the system’s robustness and error handling.
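The three categories above can be covered by separate tests against the same chain of components. This sketch assumes two hypothetical components, `extract_features` and `classify`, the latter a simple rule standing in for a trained model. The tests are plain `test_*` functions, so pytest can collect them as-is. With pytest available, `pytest.raises(TypeError)` would be the more idiomatic way to write the error-condition check. A `try`/`except` is used here only to keep the sketch dependency-free.

```python
# Hypothetical components standing in for real modules.
def extract_features(text: str) -> dict:
    """Feature-extraction component: turns raw text into numeric features."""
    if not isinstance(text, str):
        raise TypeError("text must be a string")
    words = text.split()
    return {"n_words": len(words), "n_chars": len(text)}


def classify(features: dict) -> str:
    """Model component: a stand-in rule instead of a trained model."""
    return "long" if features["n_words"] > 5 else "short"


def run_pipeline(text):
    return classify(extract_features(text))


def test_normal_operation():
    assert run_pipeline("the quick brown fox") == "short"
    assert run_pipeline("one two three four five six seven") == "long"


def test_edge_cases():
    assert run_pipeline("") == "short"               # empty input
    assert run_pipeline("   \n\t ") == "short"       # whitespace only
    assert run_pipeline("word " * 10_000) == "long"  # very long input


def test_error_conditions():
    try:
        run_pipeline(None)  # malformed input from an upstream component
    except TypeError:
        pass
    else:
        raise AssertionError("expected TypeError for non-string input")
```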

3. Automate Testing

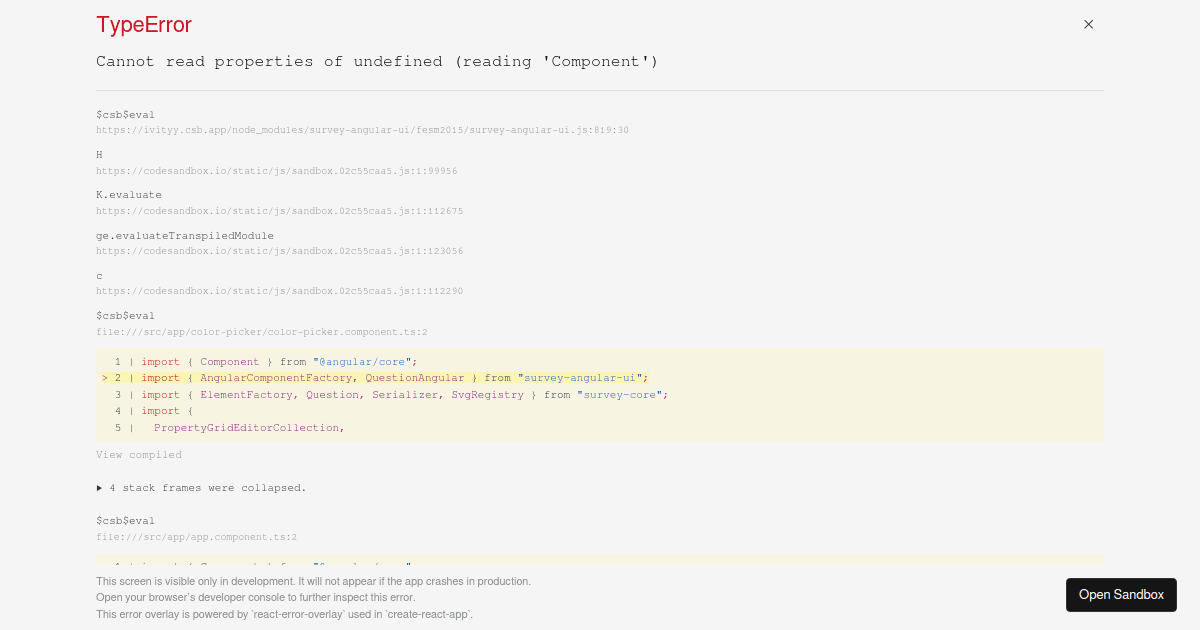

Automate integration testing where possible to improve efficiency and consistency. Automated tests can be run frequently, providing quick feedback on the integration status of components. Use tools and frameworks that support integration testing for AI systems, such as test frameworks (e.g., pytest, JUnit) and continuous integration (CI) systems.

Example: Implement automated integration tests for an AI system’s data pipeline to confirm that data is correctly ingested, processed, and fed into the model.
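A minimal sketch of such a test follows, assuming a hypothetical three-stage pipeline. `ingest` parses CSV text, `process` drops incomplete rows and converts values to floats, and `StubModel` records exactly what it was fed. Using a recording stub in place of the real model lets the test check the data at the model boundary, not just the final predictions.

```python
import csv
import io


# Hypothetical pipeline stages; in a real system these would live in
# separate modules and the model would be a trained artifact.
def ingest(raw_csv: str) -> list[dict]:
    """Ingestion: parse raw CSV text into records."""
    return list(csv.DictReader(io.StringIO(raw_csv)))


def process(records: list[dict]) -> list[list[float]]:
    """Processing: drop rows with missing values and convert to floats."""
    rows = []
    for r in records:
        if r["age"] and r["income"]:
            rows.append([float(r["age"]), float(r["income"])])
    return rows


class StubModel:
    """Stand-in model that records its input and returns one score per row."""

    def __init__(self):
        self.received = None

    def predict(self, rows):
        self.received = rows
        return [0.5 for _ in rows]


def test_pipeline_end_to_end():
    raw = "age,income\n34,52000\n,41000\n29,38000\n"
    model = StubModel()
    preds = model.predict(process(ingest(raw)))
    # The row with a missing age must be dropped before it reaches the model.
    assert model.received == [[34.0, 52000.0], [29.0, 38000.0]]
    assert len(preds) == len(model.received)
```

In a CI system, a job that runs `pytest` on the test module would execute this check on every commit.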

4. Use Realistic Data

Integration testing in AI systems should use data that closely resembles real-world scenarios. Synthetic or mock data may not capture the complexities and nuances of actual data. Incorporate real datasets to ensure that the integration tests reflect genuine operating conditions.

Example: For a natural language processing (NLP) system, use real text corpora to test how the system handles different languages, dialects, and contexts.
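Real text exposes problems that ASCII-only mock strings rarely trigger. This sketch uses a hypothetical preprocessing step, `normalize_text`, which applies Unicode NFC normalization and case folding. It then checks the step against the kinds of strings real corpora contain:

- a decomposed accent (`e` plus a combining acute accent), which looks identical to the precomposed `é` but compares unequal without normalization;
- the German `ß`, which case-folds to `ss`;
- CJK text without spaces, which a whitespace tokenizer would treat as a single token.

The samples are illustrative. In practice they would be drawn from the production corpus.

```python
import unicodedata


def normalize_text(text: str) -> str:
    """Hypothetical preprocessing step: Unicode NFC normalization + case folding."""
    return unicodedata.normalize("NFC", text).casefold()


# (raw input, expected normalized output) pairs of the kind real corpora contain.
REAL_WORLD_SAMPLES = [
    ("cafe\u0301", "caf\u00e9"),   # decomposed vs precomposed accent
    ("Straße", "strasse"),         # German sharp s under case folding
    ("東京の天気", "東京の天気"),      # CJK text without spaces
]


def test_preprocessing_on_realistic_text():
    for raw, expected in REAL_WORLD_SAMPLES:
        assert normalize_text(raw) == expected, (raw, expected)
```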

5. Monitor Data Integrity

Ensure that data integrity is maintained throughout the integration process. Verify that data transformations, aggregations, and feature extractions are accurate and consistent. Data corruption or loss during integration can lead to incorrect model predictions or system failures.

Example: In a computer vision system, verify that image data is correctly preprocessed and passed to the model without loss of quality or detail.
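One way to express "without loss of quality or detail" as a test is to check three things after preprocessing:

- the image shape is unchanged;
- every value is in the expected range;
- the original pixels can be recovered exactly.

The sketch below assumes a hypothetical preprocessing step that scales 8-bit grayscale pixels to `[0, 1]`. It represents images as nested lists only to stay dependency-free. A real system would more likely use array libraries and compare shapes and dtypes directly.

```python
# Hypothetical preprocessing step: scale 8-bit grayscale pixels to [0, 1].
def preprocess(image: list[list[int]]) -> list[list[float]]:
    return [[px / 255.0 for px in row] for row in image]


def integrity_errors(original, processed) -> list[str]:
    """Check that preprocessing kept the shape and lost no pixel information."""
    if len(original) != len(processed) or any(
        len(a) != len(b) for a, b in zip(original, processed)
    ):
        return ["shape changed"]
    errors = []
    for r, (row_in, row_out) in enumerate(zip(original, processed)):
        for c, (px, val) in enumerate(zip(row_in, row_out)):
            if not 0.0 <= val <= 1.0:
                errors.append(f"pixel ({r},{c}) out of range: {val}")
            elif round(val * 255) != px:
                # The 8-bit value cannot be recovered, so detail was lost.
                errors.append(f"pixel ({r},{c}) not recoverable: {px} -> {val}")
    return errors
```

A step that silently quantizes values, for example by rounding to one decimal place, would be reported as "not recoverable" rather than passing unnoticed.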

6. Test with Diverse Scenarios

Evaluate the integration of components under a variety of scenarios to uncover potential issues. This includes testing different configurations, operating conditions, and user inputs to ensure that the system performs reliably across all of them.

Example: Test an AI-powered chat system with diverse user queries, varying levels of complexity, and different languages to ensure robust performance.
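A compact way to cover many scenarios is to take the Cartesian product of the dimensions that vary and check invariants that must hold in every combination. This sketch assumes a hypothetical `chat_respond` function standing in for the chat system. It varies query complexity, language setting, and output length limit, which gives 4 × 3 × 2 = 24 scenarios. The invariants checked are that the reply is non-empty and respects the length limit.

```python
import itertools


# Hypothetical stand-in for the chat system under test; a real test would
# call the deployed service or its client instead.
def chat_respond(query: str, language: str, max_chars: int) -> str:
    if not query.strip():
        reply = "[clarify] Could you rephrase that?"
    else:
        reply = f"[{language}] {query}"
    return reply[:max_chars]


QUERIES = [
    "Hi",                                                   # simple
    "Compare three laptops under $1000 for video editing",  # complex
    "¿Qué hora es?",                                        # non-English
    "   ",                                                  # blank input
]
LANGUAGES = ["en", "es", "ja"]
MAX_CHARS = [16, 256]


def test_all_scenarios():
    failures = []
    for query, lang, limit in itertools.product(QUERIES, LANGUAGES, MAX_CHARS):
        reply = chat_respond(query, lang, limit)
        # Invariants every scenario must satisfy, whatever the input.
        if not reply or len(reply) > limit:
            failures.append((query, lang, limit, reply))
    assert not failures, failures
```

Collecting all failures before asserting, instead of stopping at the first one, shows which combinations of conditions break the system.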

7. Validate Inter-component Communication

Check that communication between components functions as expected. This includes validating that data is correctly transmitted, received, and processed by the different components. Use logging and monitoring tools to track data flows and identify any issues.

Example: For a recommendation system, verify that user data is correctly transmitted from the front-end interface to the backend service and then processed by the recommendation engine.
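The sketch below traces one request across those three hops:

- a hypothetical front end serializes the request as JSON, as it would over HTTP;
- the backend parses the request, logs it, and forwards it;
- the engine records every call it receives, so the test can compare what was sent with what actually arrived.

All names are illustrative assumptions. In a deployed system the log line would go to a monitoring pipeline rather than stderr.

```python
import json
import logging

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("integration")


class RecommendationEngine:
    """Hypothetical engine that records the requests it actually receives."""

    def __init__(self):
        self.received = []

    def recommend(self, user_id: str, history: list[str]) -> list[str]:
        self.received.append((user_id, history))
        return [item for item in ["i1", "i2", "i3"] if item not in history]


def frontend_submit(user_id: str, history: list[str]) -> str:
    """Front end: serialize the request body as JSON."""
    return json.dumps({"user_id": user_id, "history": history})


def backend_handle(body: str, engine: RecommendationEngine) -> list[str]:
    """Backend: parse the payload, log the data flow, forward to the engine."""
    payload = json.loads(body)
    log.info("forwarding user_id=%s items=%d",
             payload["user_id"], len(payload["history"]))
    return engine.recommend(payload["user_id"], payload["history"])


def test_user_data_reaches_engine_intact():
    engine = RecommendationEngine()
    recs = backend_handle(frontend_submit("user-42", ["i2"]), engine)
    assert engine.received == [("user-42", ["i2"])]
    assert recs == ["i1", "i3"]
```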

8. Perform Regression Testing

Whenever components are updated or modified, perform regression testing to ensure that existing functionality remains unaffected. Regression tests help identify unintended side effects, where a change in one component breaks the components that depend on it.

Example: After updating a machine learning model, rerun the integration tests to verify that the model’s integration with the data processing and feature engineering components still functions correctly.
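Because model outputs are floating-point values that may shift slightly between versions, regression checks usually compare against recorded baseline ("golden") outputs within a tolerance rather than expecting exact equality. In this sketch the `GOLDEN` values and the `0.02` absolute tolerance are made-up assumptions. A real suite would record baselines from the previous model version and choose a tolerance that reflects acceptable drift.

```python
import math

# Hypothetical baseline outputs recorded from the previous model version
# for a fixed set of feature vectors.
GOLDEN = {
    (1.0, 2.0): 0.80,
    (0.0, 0.0): 0.50,
    (3.0, -1.0): 0.65,
}


def regression_failures(predict, golden, abs_tol=0.02):
    """Return inputs whose new prediction drifts beyond tolerance from baseline."""
    failures = []
    for features, expected in golden.items():
        actual = predict(list(features))
        if not math.isclose(actual, expected, abs_tol=abs_tol):
            failures.append((features, expected, actual))
    return failures
```

After a model update, `regression_failures(new_model.predict, GOLDEN)` should return an empty list. Any entries pinpoint the inputs whose behavior changed.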

9. Incorporate Feedback Loops

Establish feedback loops to continuously improve integration testing practices. Gather insights from test results, user feedback, and system performance to refine test cases and scenarios. This iterative approach helps the test suite adapt to evolving requirements and ensures ongoing reliability.

Example: If integration tests reveal performance bottlenecks, analyze the underlying causes and update the test cases so that future test runs catch those problems.
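To locate a bottleneck, it helps to time each stage of the integrated pipeline separately. This sketch runs a list of hypothetical `(name, function)` stages in order and records wall-clock time per stage with `time.perf_counter`. The `time.sleep` call simulates a slow feature-extraction stage. Once a bottleneck is known, a latency-budget test can be added so future runs catch regressions. The 0.5-second budget here is an arbitrary assumption, and timing assertions should be generous to avoid flaky CI runs.

```python
import time


def profile_stages(stages, data):
    """Run each (name, fn) stage in order; return output and seconds per stage."""
    timings = {}
    for name, fn in stages:
        start = time.perf_counter()
        data = fn(data)
        timings[name] = time.perf_counter() - start
    return data, timings


def slow_feature_extraction(rows):
    time.sleep(0.05)  # simulated bottleneck
    return [[x * 2 for x in r] for r in rows]


STAGES = [
    ("ingest", lambda rows: rows),
    ("features", slow_feature_extraction),
    ("inference", lambda rows: [sum(r) for r in rows]),
]


def test_feature_stage_within_budget():
    _, timings = profile_stages(STAGES, [[1, 2], [3, 4]])
    assert timings["features"] < 0.5, timings  # hypothetical latency budget
```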

10. Document and Communicate

Thoroughly document the integration testing process, including test cases, results, and any issues encountered. Clear documentation fosters better communication among team members and stakeholders, ensuring that everyone is aligned on the integration status and any required actions.

Example: Maintain detailed records of integration test results for an AI system’s feature extraction component, including any discrepancies observed during testing and the steps taken to resolve them.
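Records like these are easier to search and compare over time when each outcome is stored in a consistent structure. The sketch below assumes a hypothetical record format covering component, test name, status, discrepancy, and resolution. It appends each outcome to a JSON Lines file, one JSON object per line. The field names and file layout are illustrative choices, not a standard.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class IntegrationTestRecord:
    """Hypothetical record format for one integration-test outcome."""
    component: str
    test_name: str
    status: str            # e.g. "passed" or "failed"
    discrepancy: str = ""  # what was observed, if anything went wrong
    resolution: str = ""   # steps taken to resolve it


def to_json_line(record: IntegrationTestRecord) -> str:
    """Serialize one record as a single JSON line."""
    return json.dumps(asdict(record), ensure_ascii=False) + "\n"


def append_record(path: str, record: IntegrationTestRecord) -> None:
    """Append a record so the file keeps a running, grep-friendly history."""
    with open(path, "a", encoding="utf-8") as fh:
        fh.write(to_json_line(record))
```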

Conclusion

Component integration testing is crucial to ensuring the functionality and reliability of AI systems. Organizations can enhance the quality and robustness of their AI solutions by following best practices such as:

- defining clear integration points;
- developing comprehensive test cases;
- automating tests;
- using realistic data;
- validating inter-component communication.

Integration testing not only helps identify and fix issues early. It also contributes to the overall success of AI projects by ensuring seamless interaction between complex components. Adopting these best practices leads to more reliable, efficient, and effective AI systems that meet user expectations and business goals.
